TLDR: Don’t give a toddler a flamethrower.

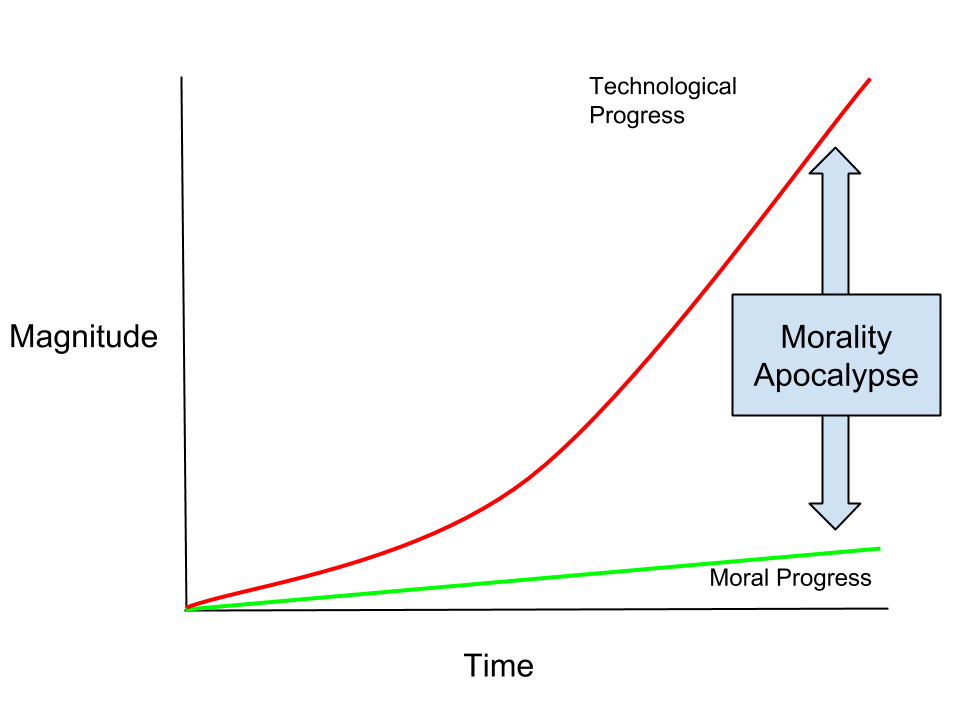

In more detail: The letter makes an argument for allocating more funds towards solving less immediate causes of human suffering. In particular, one emerging problem is that we as a species may face a morality apocalypse: Our species’ level of moral responsibility may become laughably insufficient to manage increasingly powerful technologies. We may become like a toddler with a flamethrower, resulting in suffering on a massive scale.

More detail yet: Technology allows us to impact the world more drastically, and can as easily be used for good as it can for evil. Technology is growing at an accelerating rate, while moral progress is plodding. Already our technological power outstrips our ability to use it responsibly (e.g. are we morally developed enough as a species to be entrusted with nuclear weapons?). A mistake would be to view morality as a fixed part of the human condition — there may be technological ways to enhance empathy or decrease our species’ tendency towards greed, revenge, and moral flexibility under duress. Without intervention to remedy our morality (perhaps through technological means), humanity may be at significant risk for horrific outcomes as our technical abilities more drastically eclipse our moral ones.

Dear Mr. and Mrs. Gates,

You are a smart and generous couple who have impacted the world for the better with earnest good intention. I am glad there are wise people like you, who strive to reduce human suffering. Although no doubt you have both given much thought to how you charitably spend, please allow me gently to appeal to your intellects and argue for increased focus on problems far less immediate, but perhaps more fundamental and pervasive to the human condition. In my mind, the central approaching dilemma of our age is that technological development has far outpaced moral development and seemingly will continue to do so without intervention. As a result, I believe it is possible we may soon approach a crossroads that, if not carefully navigated, may wholly undermine our potential as a species to happily flourish and survive: a potential morality apocalypse.

First let me reiterate that the world needs more people like you who aim to eliminate absurd deaths and needless pain. It is easy for most of us to ignore suffering, to instead allow the minutiae of each of our small but worthwhile lives to monopolize our focus. Few consistently raise their focus from the petulant stream of day-to-day concerns; few ask how they can remedy in any serious way the vast inequity resulting from the dumb lottery of life. What you are doing is an honest and good thing, which I do not criticize. My only aim is to illuminate the possibility that perhaps greater good, perhaps even humanity’s escape from possible extinction, could be brought about through thoughtful reallocation of funds.

There are many reasons for the pain and suffering that exist in the world. One can imagine such reasons laid out in order from more immediate causes (e.g. starvation and sickness) to those more fundamental but abstract (e.g. tendencies for power structures and greed to subvert human morality). All things being equal, a fundamental solution is preferable to a more immediate one, because in the long term it provides greater leverage. That is, we know a disease is better cured when its causes rather than its symptoms are alleviated.

However, it is far better to treat symptoms than to do nothing at all, or to mistakenly treat false causes as if they were real. Worse, historically many have caused additional harm even as they aim genuinely to help. The vast potential for such delusion is reason enough to focus on concrete and immediate causes of suffering: One can see and be sure of the real impact of one’s investment. There is little risk of causing harm. It is difficult to argue with spending money to make people’s lives better; and there is no doubt that you have indeed made the world a better place for many.

On the other hand, the burden of the intelligent and caring with ample resources is to strive to improve the world, not only for our children, but for their grandchildren and those beyond. This is the legacy we leave behind. In adopting such a long-term perspective, there is cause to wonder whether over the course of many years the human race will not revisit its inequities in different forms; why is it that curable diseases require philanthropy to remedy in some locales in the first place? One might ask also if over time the species is not in increasing danger of exterminating itself: weapons are increasingly powerful, but human morality and reasoning remain flawed. Seen this way, reducing the risk of future inequity or of catastrophic outcomes might trump the most immediate improvement of life. Still, even if one believes this argument, the question remains: what is to be done about it?

While I believe that the fundamental underlying problem is clear to identify, unfortunately it is not easy to solve. In particular, there is a constant factor underlying humanity’s failure to rise above tribal differences, one that may significantly undermine reaching our potential as a kind and caring species: The wide variance in our morality. This factor in itself may be unsurprising, as it has been with us throughout our history, but the danger from it is compounding in the modern age. Put simply, we have entered an age in which our technology develops much more quickly than does our morality.

While morality has developed, it has done so through slow, plodding cultural change over generations. And despite cultural progress many fundamental human imperfections remain systemically ingrained, due to the nature of our own brains, which are biologically biased towards selfish and short-sighted behaviors unsuited for the modern world. That is, the genetic basis of human behaviors is adapted to a context more brutal and primitive, and has been tinkered together from simpler brains through millennia of natural evolution. The end effect is a layering of the most human aspects of ourselves upon baser animalistic drives.

Now, I do not deny that technological growth has made great things possible for humanity. Diseases once death sentences have become mere annoyances, and travel requiring months of danger now can be completed affordably in hours. There is nothing inherently wrong with accelerating technological progress. However, advancing technology also requires increased responsibility. For example, technology has enabled for the first time a capacity for a single action (a command to launch missiles) to decimate the planet. In other words, technology simply increases what is possible, without any morality of its own. The problem is that because technological growth has so soundly outpaced growth of morality, we as a species have become unworthy of the terrible responsibility of what our technology makes possible: Fallible politicians hold the world’s fate in their hands. Thus without significant moral development, the outlook of our species appears grim. As technology surges forward, ever expanding the possible manipulations of nature, it increases in parallel the magnitude of evil a misguided person, group, or nation may inflict.

From reading classic literature, it is clear that there is some understanding of our moral limitations as a species, of the strange human condition as a whole, and of the relative fixedness of that human condition over time. Adultery undid good men as often a hundred years ago as it does now; murderous passion and revenge are as relevant as they ever were. Of course we remain as capable as ever of great love and kindness, but our capacity for tribalism, violence, and greed, is also as ever unchanged. There exist economic incentives for technology to barrel ahead, to make new things possible, to make our lives easier, to enable new weapons; yet there do not exist similarly powerful incentives for careful reflection over what such advances will reap. Most importantly, there do not exist similarly powerful incentives for improving human morality. Ask yourself this: What has been more quickly adopted — cell phones, or equal treatment for sexual and racial minorities? Drone warfare, or the concept that the value of a human’s life does not depend upon its country of birth?

The main thesis is that it may be possible to apply science and technology to understand and improve human morality, and that this is a most critical human endeavor. Tinkering with morality itself is a weighty undertaking, and requires exceeding care and caution; however, if there were a medicine without side effects that could improve my ability to empathize with others, I would gladly take it. I am not advocating for some sort of misguided utopia, but for a rational effort to help us overcome our outdated antisocial urges. Human fallibility and weakness are well-documented, both scientifically and historically, and such fallibility is largely the cause of systemic human suffering: It underlies genocides, world hunger, and poverty.

While technology offers the potential to increase human moral abilities, problematically there exists little economic incentive for such a research program currently; or at least, no incentive proportionate to its importance. Indeed, increased moral awareness could undermine consumerism, as a more caring human likely would more carefully weigh the price of an expensive cup of coffee against the real impact similar money could have on a human life in dire circumstances. This is where charity and passion are most needed: Where human progress and economic drivers clash.

The critical importance of timely focused research into improving morality can be illustrated by a simple concept called path dependence. The main idea is that history matters. In particular, the ordering of certain key events can determine the outcome’s quality. For example, imagine that it is inevitable that at some point technology will advance to the point that it becomes elementary for anyone to construct a massively destructive weapon. Knowledge has a tendency to leak, and technology has a tendency to render the previously impossible possible, so this is not an absurd possibility; after all, the mechanism by which a nuclear bomb operates is common knowledge (although luckily its construction still requires specialized equipment and materials). Imagine also that another event is inevitable given enough research: Uncovering a deep understanding of human morality and how to improve it. Now, you can see that the ordering of these two discoveries may critically influence the outcome of this imagined history. If the terrible weapon is discovered first, some deluded selfish individual will likely deploy it, resulting in horrific casualties; we can call this outcome the morality apocalypse. However, if first humanity can remedy its morality on a large scale, the terrible weapon becomes less terrible.

I admit that this argument is speculative — and that it is light on the exact research trajectories that should be pursued (some thoughts deeper than mine are provided by Ingmar Persson and Julian Savulescu, and there are several articles by others as well describing “Moral Enhancement”). At the same time that this argument is speculative, it is hard to ignore the blazing success of technology, largely driven by economic interests, and in comparison, the relative stagnation of our own morality.

While we can and do romanticize the human condition and our poetic faults, it is harder to romanticize the terrible acts humans inflict upon humans as a result. I truly believe that technology can yet be the linchpin for human flourishing, but perhaps only if applied delicately to augment the quiet Jiminy Cricket on our shoulder, the one whom we too easily can brush aside when pursuing our own interests at the expense of others, both here among our closest companions, and abroad among those who suffer but whom we have never met.

This cause, of improving morality through scientific or technological means, I believe is the greatest leverage point for charity to impact the world of our grandchildren, and I ask you to consider allocating some of your future funds towards research in the area.

With respect,

Joel

Joel, valiant attempt at writing about morality without bringing God into the picture. It is however impossible to do so. When all is said and done, one will find out – the hard or the easy way – that all morality comes from God, and more specifically, Jesus Christ and the work He accomplished at the cross. As to why Bill breaks out into a rash of hives when he hears the mention of His name, here’s an article that sums up Bill Gates’ God-less views on morality and his own soul.

Coal to Newcastle. Bill is already doing a great job of focusing on real issues such as malaria.

That said, I’ll betcha a dollar he’ll hire you to start a program on morality improvements. Good luck.

Great thoughts, Joel. I have been thinking lately about a few of the things you mentioned in your blog, and I totally agree with you. If we don’t address these problems soon, in time we will surely face consequences (Morality Apocalypse); in fact, we are facing a few of them right now.

Mr. and Mrs. Gates are doing great work for humanity, and I think if everyone comes together we can build a better world for tomorrow.

Happy to discuss in detail.