I recently bought AT&T DSL and documented their frustratingly over-engineered registration process. In short: a simple web-form system shouldn’t end up installing Internet Explorer 8 for you. I find it particularly interesting because no lean startup would ever do this in a million years, yet we aren’t particularly surprised when a big company flushes dollars down the toilet this way.

When AT&T activated my service and I received the DSL modem and a manual in the mail, I assumed it would be a quick five-minute process. Configuring the modem itself was straightforward and went smoothly. When the modem is configured, you pull up a browser, which will automatically redirect to an AT&T registration page.

The landing page was a little too flashy: it automatically plays some audio instructions in the background, basically telling you to hit the “next” button. They inform you that you need to supply some information to AT&T, and in return they will give you a username and password to add to the modem’s configuration, which will allow you to get to the internet. The obvious approach is a simple HTML web form.
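For the sake of argument, here is roughly how little that obvious approach needs. This is a minimal Python sketch, not AT&T’s actual system: the field names, the `handle_registration` function, and the `example.net` username scheme are all made up for illustration.

```python
import secrets
from urllib.parse import parse_qs

# Hypothetical field names; the real registration fields are not known.
REQUIRED_FIELDS = ("name", "phone", "account_number")

# A plain HTML form that any browser on any platform can render and submit.
FORM_HTML = """<form method="post" action="/register">
  <input name="name" placeholder="Name">
  <input name="phone" placeholder="Phone number">
  <input name="account_number" placeholder="Account number">
  <button type="submit">Next</button>
</form>"""


def handle_registration(body: str) -> dict:
    """Parse a URL-encoded form body and return illustrative credentials."""
    # parse_qs maps each key to a list of values and drops blank ones.
    fields = {key: values[0] for key, values in parse_qs(body).items()}
    missing = [f for f in REQUIRED_FIELDS if not fields.get(f)]
    if missing:
        return {"ok": False, "missing": missing}
    # Made-up credential scheme; a real ISP would look these up in its
    # own account system instead of generating them on the spot.
    return {
        "ok": True,
        "username": f"{fields['account_number']}@example.net",
        "password": secrets.token_urlsafe(12),
    }
```

Hook `FORM_HTML` and `handle_registration` up to any web server and you have a registration flow with no system check, no ActiveX, and no browser upgrade.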

Instead, when I hit next, there is a five-minute pause as AT&T’s website “checks my system.” Why this takes five minutes is beyond me. It then complains that my browser is not supported. Well, I use Chrome on Ubuntu, which is an obscure setup, so fair enough, I’ll switch to Firefox. Wrong. It requires an ActiveX control (a way to run native code on my machine; also, who still uses ActiveX? It’s 2011. Does AT&T also send smoke signals from one department to another to announce the results of their fancy abacus calculations?). This means I must find a Windows box and use Internet Explorer. Why? For a web form? Ridiculous, but okay, another hoop to jump through.

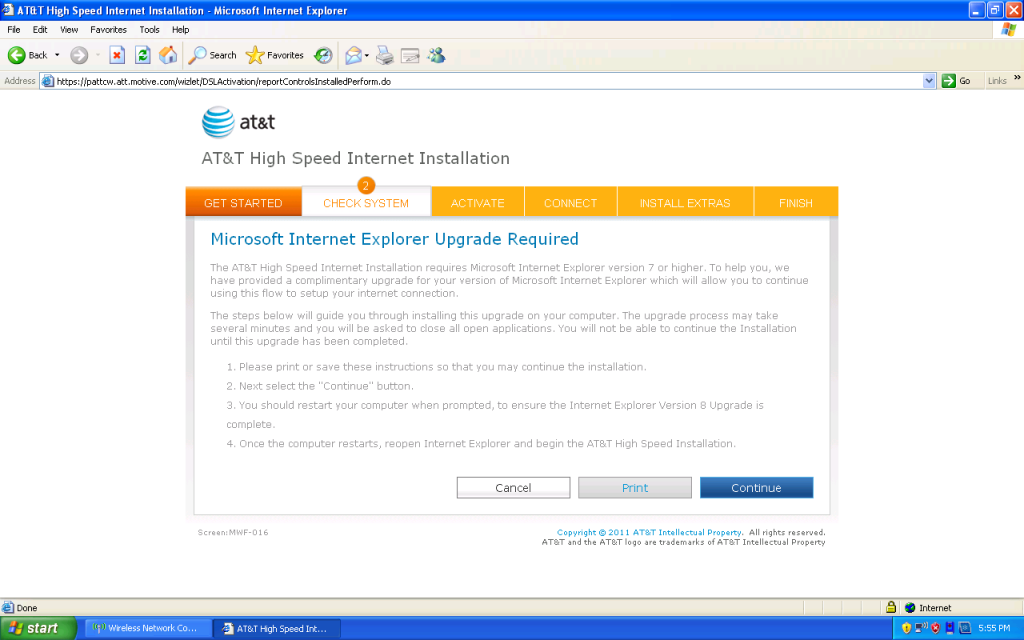

So, I boot into an old computer that is running Windows XP. I launch whatever version of IE is on it, which has never been used, endure the flashy landing page again, wait another five minutes for the website to “Check My System,” and then wait another five minutes for it to download and run some sort of ActiveX control. I’m getting frustrated by this point, because what should be just a simple platform- and browser-independent web form has turned into some kind of ridiculous circus. Now, the ActiveX control informs me that my version of IE isn’t good enough!

I’m livid. But luckily the ActiveX control will download a new version of IE for me! Well, that is a nice touch I guess, although ridiculous in its own way; a simple web form now necessitates complicated contingency considerations.

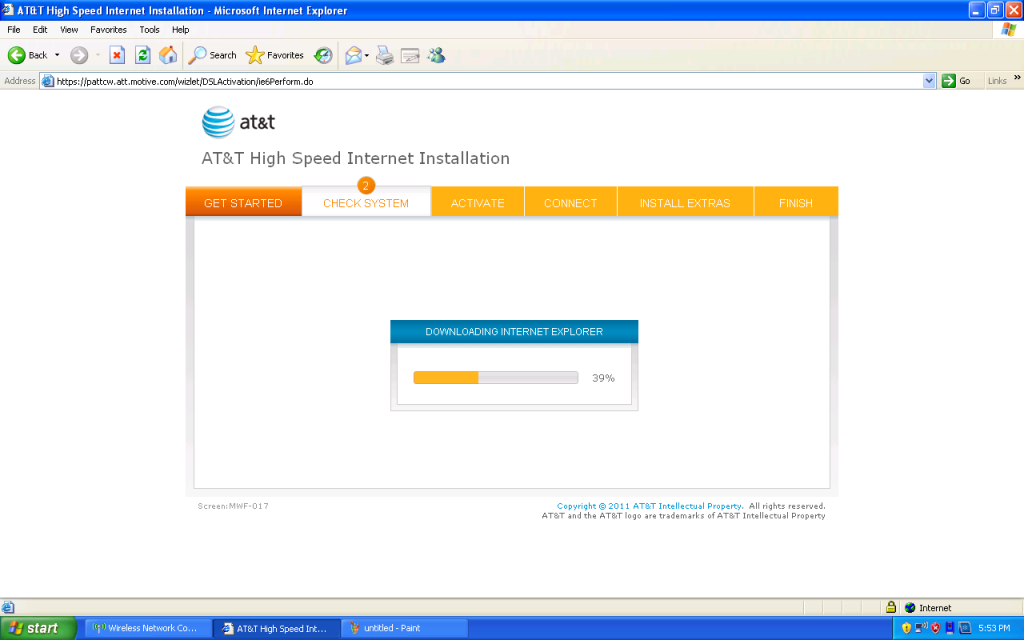

The new browser version takes forever to download and freezes once or twice, which does not inspire confidence in the speed or consistency of my newly acquired broadband connection. When it freezes, I have to restart the browser and re-endure the five-minute checking process before the ActiveX control chides me for my ancient version of IE.

Finally it’s done. It warns me before it installs IE 8 that AT&T may also include some bundled crapware (I didn’t notice any though). Then it installs. And reboots my computer. And then I go to the flashy landing page, wait five minutes for it to analyze my system, and wait five more minutes for the ActiveX control to load.

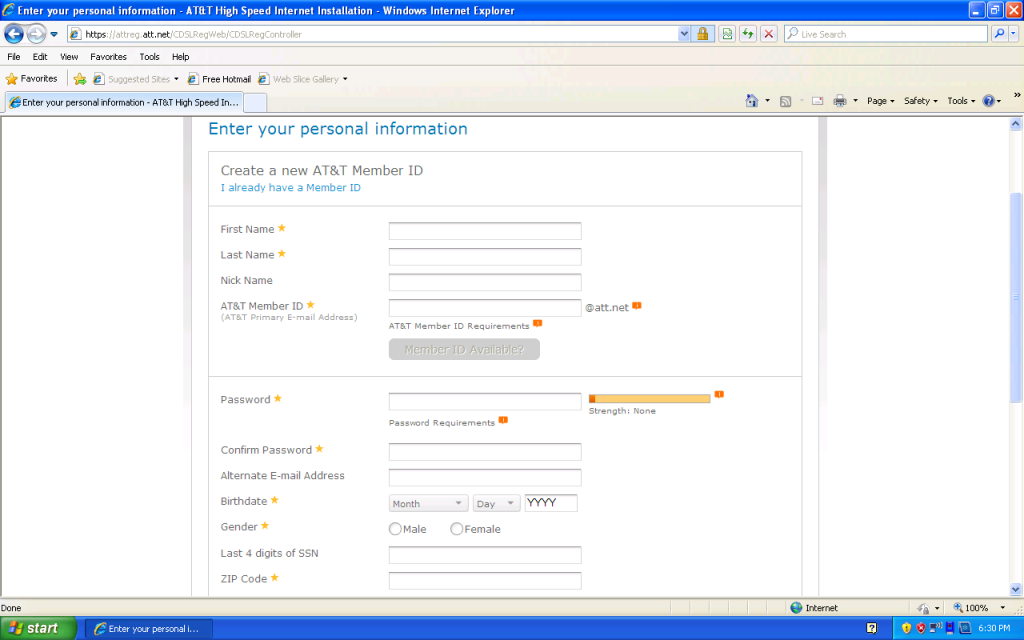

AND FINALLY…WHAT MAGICAL DELIGHTS AWAIT ME…WHAT WONDERS NECESSITATED FINDING AN XP BOX, INSTALLING AN ACTIVEX CONTROL, DOWNLOADING AND INSTALLING A NEW VERSION OF IE, REBOOTING, ETC…??

Oh. It’s a web form. Cool.

Now: Would Dropbox do something this silly? 37signals? Even large tech companies like Amazon and Google wouldn’t. But somehow at AT&T some bloated process or disconnect between management and engineers resulted in a wasteful, irrational implementation.

Update: Wow, I didn’t think this would hit #1 on Hacker News, and I want to exploit this incoming traffic, so go look at http://endlessforms.com/. My colleague Jeff Clune has launched this cool research-related site where you evolve 3D objects interactively, and they can be printed out on a 3D printer.

Also, an anonymous commenter from the Hacker News thread illuminates the true reason for the IE/ActiveX dependence:

What is called AT&T now is actually SBC, a Baby Bell with a penchant for out-sourcing. SBC bought Cingular, AT&T, Pacific Bell, lots of other companies. Their AT&T purchase was motivated in part by the name: everyone has heard of AT&T.

SBC brought with them metric tons of bureaucracy, all running in IE. Disgusting. It’s not just the external web interfaces. We have to deal with this BS internally, too. 1990s web interfaces that only work in IE (sometimes requiring 7, sometimes requiring 6) for every interaction with corporate. Taxes, mandatory training, time reporting, everything.

We have to grab a spare Windows machine or run a VM with XP in it. Most of the tech side of company knows and hates the whole thing. The impenetrable bureaucracy makes it impossible to find out who to complain to. There is no escape. The article is dead-on about what’s wrong, and I know first-hand, because we have to eat that dog food weekly.

The true cause of the crufty web form is more insidious and historical than an outsider might intuitively guess. It is incredible how, in less than three hours, a single article can actually ferret out a strange nugget of truth.