One reason I love Linux is the wide array of packages that can be installed instantly from the command line or downloaded easily from third-party sites. While this doesn’t necessarily distinguish Linux from Windows, the Linux ecosystem is designed for power users: you can quickly cobble together an impressive tool from wide-ranging components, using a scripting language as glue.

Because the ecosystem of components available for Linux has reached a critical mass (just about anything you might need probably exists), what you can accomplish in four hours of tinkering is surprising. For example, in this post I describe a tool I built to investigate whether our literature is getting dumber.

The Problem: Monotonous Word Counting

For a research project I wanted to estimate the word counts and readability of popular books (i.e., is a particular book written for a highly educated audience or for the masses?). One characteristic of hackers is that they are lazy in a special sense: they dislike tedious, intellectually void work like manually counting the words on a page. So I investigated automated alternatives.

Bookalyzer: Scraping Google Books Previews

I noticed that excerpts from many books are available through Google Books. However, the text cannot be copied and pasted (it is rendered as an image through JavaScript). To analyze a book’s contents automatically, I would need to convert the images into machine-readable text, which is not a simple task.

Whimsically and unrealistically, I thought it’d be great to have a tool that would: 1) control a web browser to use Google Books, 2) go to an arbitrary page, 3) take a screenshot, 4) run the screenshot through OCR (optical character recognition), and 5) analyze the resulting text. It seemed like an ambitious, ungainly project, especially since I had no experience with browser automation or OCR tools.

Naturally, my initial rational impulse was to give up and do the work manually. Luckily, my allergy to dull work prevailed. After fifteen minutes of web searching, a somewhat realistic plan had congealed:

1. From the title of the book, query the Google Books API to determine an ISBN.

2. Feed the ISBN into an HTML document that uses the Google Books Embedded Viewer API to zoom in and scroll a few pages in, reaching a representative page (hopefully full of text).

3. Use Selenium to drive Firefox to render the HTML document and take a screenshot.

4. Crop the screenshot and do some basic segmentation with ImageMagick to isolate the text.

5. Translate the image into text with Tesseract, a fairly good open-source OCR engine.

6. Finally, use the page count and the number of words on this particular page as an (admittedly rough) estimate of the book’s word count, and calculate a basic readability metric.
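Step 1 can be sketched against the public Google Books API volumes search endpoint. This is a minimal illustration, not bookalyzer's actual code: it assumes the `intitle:` search qualifier is a good enough match and that the first result carrying an ISBN is the right edition. The network call is kept separate from the JSON parsing so the parsing can be checked offline.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Public Google Books API endpoint for volume searches.
VOLUMES_URL = "https://www.googleapis.com/books/v1/volumes"


def build_query_url(title: str) -> str:
    """Build a volumes search URL that matches the words in the book's title."""
    params = urllib.parse.urlencode({"q": f"intitle:{title}", "maxResults": 5})
    return f"{VOLUMES_URL}?{params}"


def extract_isbn(response: dict) -> Optional[str]:
    """Return the first ISBN-13 (falling back to ISBN-10) found in a volumes response."""
    for item in response.get("items", []):
        ids = item.get("volumeInfo", {}).get("industryIdentifiers", [])
        by_type = {i.get("type"): i.get("identifier") for i in ids}
        isbn = by_type.get("ISBN_13") or by_type.get("ISBN_10")
        if isbn:
            return isbn
    return None  # no result carried an ISBN


def lookup_isbn(title: str) -> Optional[str]:
    """Query the API over the network and return an ISBN for the best match."""
    with urllib.request.urlopen(build_query_url(title)) as resp:
        return extract_isbn(json.load(resp))
```

For example, `lookup_isbn("The Hobbit")` should return an ISBN string for some edition of the book, or `None` if nothing matched. Which edition you get depends on the API's ranking, so the page count used in step 6 should come from the same volume.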

Surprisingly, this plan worked: I implemented a basic functional version of the tool in about four hours. I’ve posted my code on GitHub if you would like to leapfrog from it, although it is a hack. I’m calling it bookalyzer.
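To give a flavor of the glue involved in steps 3 through 5, here is a minimal sketch (not the bookalyzer code itself). It assumes Selenium with a working Firefox driver setup, the ImageMagick `convert` command (ImageMagick 7 also offers `magick`), and a Tesseract version that accepts `stdout` as its output base. The crop box and the fixed wait time are hypothetical placeholders, and only simple cropping is shown, not the segmentation step.

```python
import subprocess
import time
from pathlib import Path
from typing import List, Tuple


def take_screenshot(html_path: str, png_path: str, wait_seconds: float = 10.0) -> None:
    """Step 3: render a local HTML file in Firefox via Selenium and save a PNG."""
    from selenium import webdriver  # third-party; imported here so the helpers below work without it

    driver = webdriver.Firefox()  # needs a working Firefox driver (geckodriver) setup
    try:
        driver.get(Path(html_path).resolve().as_uri())
        time.sleep(wait_seconds)  # crude wait for the embedded viewer to finish loading
        driver.save_screenshot(png_path)
    finally:
        driver.quit()


def crop_command(src: str, dst: str, box: Tuple[int, int, int, int]) -> List[str]:
    """Step 4: ImageMagick command cropping a (width, height, x, y) box out of src."""
    width, height, x, y = box
    return ["convert", src, "-crop", f"{width}x{height}+{x}+{y}", "+repage", dst]


def ocr_command(image: str) -> List[str]:
    """Step 5: Tesseract command that writes the recognized text to stdout."""
    return ["tesseract", image, "stdout"]


def ocr_page(png_path: str, box: Tuple[int, int, int, int],
             cropped_path: str = "page-cropped.png") -> str:
    """Crop the screenshot to the text area, OCR it, and return the text."""
    subprocess.run(crop_command(png_path, cropped_path, box), check=True)
    result = subprocess.run(ocr_command(cropped_path), check=True,
                            capture_output=True, text=True)
    return result.stdout
```

Building the command lines in separate functions keeps the external tools swappable. For instance, you could add ImageMagick thresholding options before OCR without touching the Selenium part.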

Dummy Test: Does It Measure Anything?

The OCR garbles the text somewhat; it isn’t perfect. Also, the page chosen from the Google Books sample might not be representative of the whole book (e.g., it might be a particularly dense page). Both points imply that there will be some noise in the readability measure.

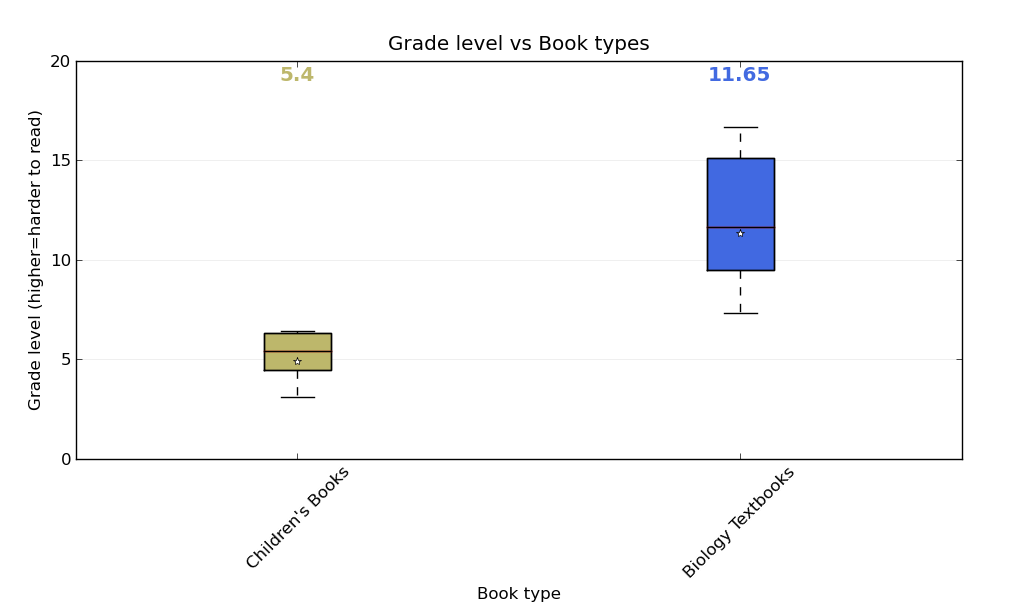

So, to test whether bookalyzer measures anything at all, I ran the tool on a set of biology textbooks and on children’s fiction (a selection of R.L. Stein’s Goosebumps series, for those that remember). The question was whether the bookalyzer tool could differentiate the reading levels of these two sets. Here is a plot of the resultant readibility scores:

There is a clear difference: the children’s books have an average readability of around 5th grade, while the biology textbooks hover around an 11th-12th grade reading level. So, despite the noise, a readability test run on a single OCR’d page of a Google Books preview has some measuring power.
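The post reports scores as grade levels but doesn't name the formula. As an assumption, the sketch below uses the Flesch-Kincaid grade level, 0.39 × (words / sentences) + 11.8 × (syllables / words) - 15.59, together with step 6's word-count extrapolation (words on the sampled page times the page count). The syllable counter is a crude vowel-group heuristic; dictionary-based counters are more accurate, and OCR errors will add noise either way.

```python
import re

WORD_RE = re.compile(r"[A-Za-z']+")


def count_syllables(word: str) -> int:
    """Crude heuristic: count vowel groups, ignoring a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1  # "make" -> 1, but "table" keeps 2
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level of a block of (OCR'd) text."""
    words = WORD_RE.findall(text)
    if not words:
        return 0.0
    sentences = max(1, len(re.findall(r"[.!?]+", text)))  # runs of terminal punctuation
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


def estimate_word_count(page_text: str, page_count: int) -> int:
    """Step 6: extrapolate the book's length from one sampled page."""
    return len(WORD_RE.findall(page_text)) * page_count
```

A very simple sentence scores below zero: `flesch_kincaid_grade("The cat sat on the mat.")` gives about -1.45, since it has six one-syllable words in a single sentence.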

Fun Application: Are Books Getting Dumber?

It seems somewhat believable that best-selling books might be getting dumber over time. We think of past generations as more well-read, and of the current generation (perhaps unfairly) as celebrity-gossip-seeking, Jersey Shore-watching idiots. Then again, perhaps every generation believes the next is stupider.

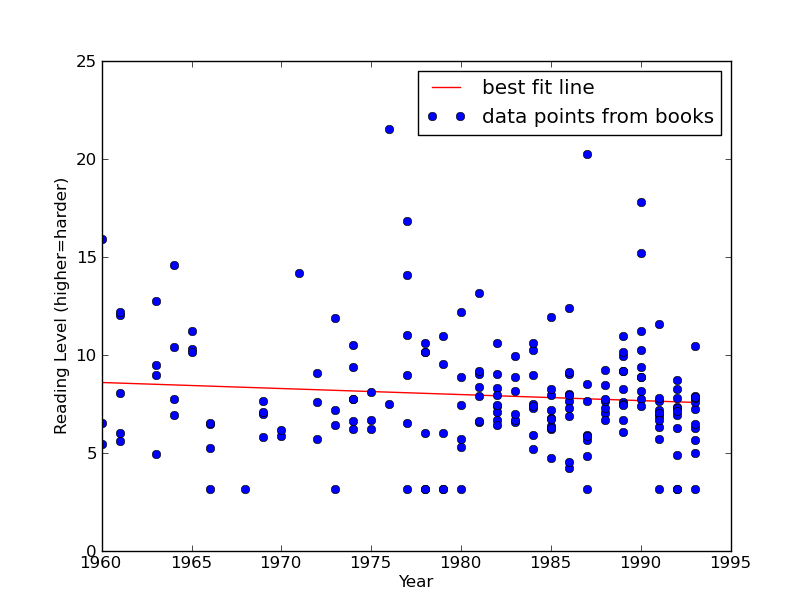

Interestingly, given the ability to estimate the readability of any google-previewable book and a list of best-selling books over a few decades, one can actually investigate this claim (albeit on a superficial level). I grabbed a list of bestselling books going back a few decades from a class website and ran the readibility tool. Here are the results:

The data does suggest a slight downward trend (-0.03 grade levels per year), although the correlation was weak (r = -0.09) and the trend was not statistically significant (p = 0.18). In other words, we might be getting dumber, but the data is inconclusive.
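The post doesn't say exactly how the slope, correlation and p-value were computed. A standard approach, assumed here, is an ordinary least-squares fit of grade level against publication year. The slope and Pearson r come straight from sums of deviations, and the significance test uses t = r * sqrt((n - 2) / (1 - r^2)) with n - 2 degrees of freedom. The standard library has no t-distribution CDF, so in practice `scipy.stats.linregress` is the easy way to get the p-value directly. Note that r = -0.09 means the trend explains under 1% of the variance (r^2 ≈ 0.008).

```python
import math
from statistics import mean
from typing import Sequence, Tuple


def trend(years: Sequence[float], grades: Sequence[float]) -> Tuple[float, float, float]:
    """Return (slope, Pearson r, t statistic) for grade level regressed on year."""
    n = len(years)
    if n < 3 or n != len(grades):
        raise ValueError("need at least three paired points")
    mx, my = mean(years), mean(grades)
    sxy = sum((x - mx) * (y - my) for x, y in zip(years, grades))
    sxx = sum((x - mx) ** 2 for x in years)
    syy = sum((y - my) ** 2 for y in grades)
    slope = sxy / sxx                # grade levels per year
    r = sxy / math.sqrt(sxx * syy)   # Pearson correlation
    # t statistic for H0: slope == 0 (undefined when |r| == 1, i.e. a perfect fit)
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return slope, r, t
```

With toy data `trend([0, 1, 2, 3], [1, 3, 2, 4])`, the slope and r are both 0.8 and t is about 1.89. With only two degrees of freedom, that is still not significant at the usual 0.05 level.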


Someone could probably do a better job (and someone probably already has) by either 1) working at Google with full access to the text of all these books, or 2) using Google’s ngram dataset (http://ngrams.googlelabs.com/datasets) in a clever way with a word-based readability metric.
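One hypothetical form such a word-based metric could take is to score a text by how rare its words are, on the assumption that rarer vocabulary is harder to read. The sketch below takes a plain dictionary of word counts, which you would have to build yourself by aggregating the ngram dataset's 1-gram files. Parsing those files is not shown because their layout differs between releases. The `rarity_score` name and the add-one adjustment for unseen words are illustrative choices, not an established metric.

```python
import math
import re
from typing import Dict


def rarity_score(text: str, counts: Dict[str, int], total: int) -> float:
    """Mean negative log10 relative frequency of the words; higher = rarer vocabulary."""
    words = [w.lower() for w in re.findall(r"[A-Za-z']+", text)]
    if not words:
        return 0.0
    # Add one to each count so words missing from the table get a finite, high score.
    scores = [-math.log10((counts.get(w, 0) + 1) / (total + 1)) for w in words]
    return sum(scores) / len(scores)
```

Because it averages over words rather than parsing sentences, a score like this needs no sentence segmentation. That makes it more forgiving of OCR damage than a sentence-based formula, but it ignores sentence length entirely.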

Conclusion

Powerful open platforms like Linux and freely available internet APIs (like Google Books) together form a critical mass that enables a limitless range of unforeseen applications. What strange magic can you bring to life in four hours?