Celery is a distributed task queue that integrates smoothly with Python. I stumbled upon it and immediately saw it as a cool technology looking for an application. You define a task in Python by adding a decorator (@task) to a function, and hungry worker compute nodes can then run it asynchronously in the background. For example, here is a simple task gratuitously lifted from the basic Celery tutorial:

    @task
    def add(x, y):
        return x + y

You can then call the task with its “delay” method and it will be serialized, whisked away to Celery’s backend queue, deserialized by a worker, and computed. The result is then ferried back to the Python caller:

    >>> from tasks import add
    >>> result = add.delay(4, 4)
    >>> result.get()
    8

What is especially nice about this is how seamless it is. If your prior exposure to parallelization is something like MPI in C++, the simplicity and elegance of Celery are refreshing. It just works, and because the workers run as separate processes, it is a nice way of circumventing the GIL in Python for expensive parallel tasks.

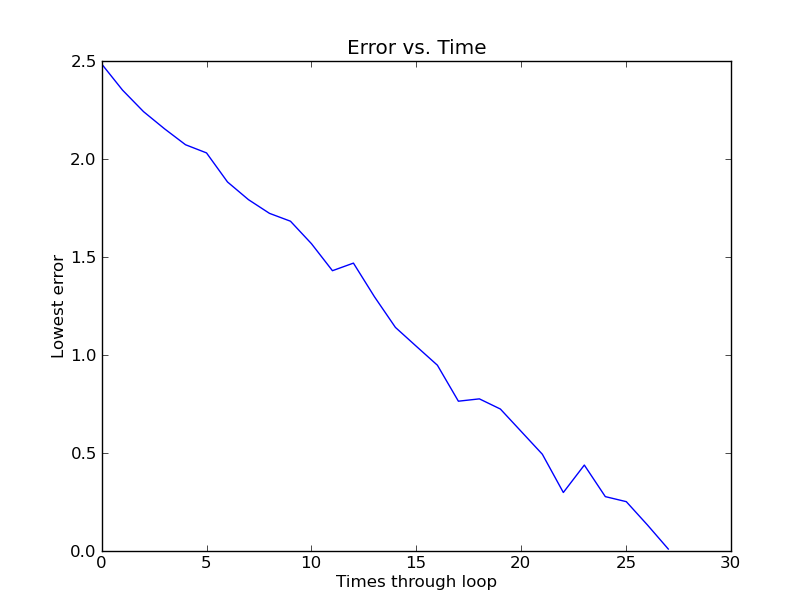

My PhD research often involves genetic algorithms. The idea is that you have an array of artificial genomes (which are themselves often lists of numbers) and a way of judging these genomes (an error function of some kind). Iteratively you apply the error function to weed out the worst of these artificial genomes, and replace those worst genomes with slight variations of the better ones. The hope is that over time you can find a genome that minimizes the error function (usually called the fitness function in GA parlance).
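To make the loop above concrete, here is a minimal sketch of a genetic algorithm in plain Python. The sum-of-squares error function, the Gaussian mutation, and all the names and parameter values are illustrative assumptions, not the code used in my research.

```python
import random


def fitness(genome):
    """Toy error function (an assumption): sum of squares, lowest at all zeros."""
    return sum(g * g for g in genome)


def mutate(genome, rng, sigma=0.1):
    """Return a slightly perturbed copy of a genome."""
    return [g + rng.gauss(0.0, sigma) for g in genome]


def evolve(pop_size=20, genome_len=5, generations=50, seed=0):
    rng = random.Random(seed)
    # Start from random genomes, each a list of numbers.
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Rank genomes from lowest error (best) to highest (worst).
        population.sort(key=fitness)
        survivors = population[: pop_size // 2]
        # Replace the worst half with slight variations of the better half.
        children = [mutate(parent, rng) for parent in survivors]
        population = survivors + children
    return min(population, key=fitness)


best = evolve()
print(fitness(best))
```

Because the better half always survives unchanged, the best error in the population can never get worse from one generation to the next.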

Usually the performance bottleneck in GAs is evaluating each genome through the fitness function. For example, sometimes this evaluation can consist of expensive physics simulations (e.g. simulations of virtual creatures).

What is nice from a parallelization standpoint is that each simulation is done on a per-genome basis and is (usually) independent of every other genome. So if you have a population of 1000 genomes to evaluate, you can create a Celery task for each of them, and each task can be offloaded either to spare cores on the same computer or to worker instances on other computers.
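The shape of this fan-out can be sketched without Celery at all. The example below uses the standard library's ThreadPoolExecutor purely as a stand-in for a pool of workers; unlike Celery's worker processes, threads do not get around the GIL, so this only illustrates the structure (one independent evaluation per genome, results collected in order), not the speed-up. The evaluate function and the tiny population are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate(genome):
    """Hypothetical fitness function: each call depends only on its own genome."""
    return sum(g * g for g in genome)


def evaluate_population(population, max_workers=4):
    # Submit one independent evaluation per genome. Executor.map returns
    # results in the same order as the inputs, whichever finishes first.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(evaluate, population))


population = [[0.0, 1.0], [2.0, 2.0], [3.0, 0.0]]
fits = evaluate_population(population)
print(fits)
```

Since no evaluation reads or writes anything belonging to another genome, the work can be handed to any worker in any order.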

Of course, Celery is handy not only for GAs but wherever you have many expensive tasks that are independent of each other, such as rendering individual frames of a ray-traced animation or multiplying many large matrices. If you can decompose your computational bottleneck into significant independent chunks, Celery may be a good fit for easily reaping the benefits of parallelization.

For the genetic algorithm application, I just had to wrap the fitness function as a celery task:

    @task
    def evaltask(domain, genome):
        fit = domain.evaluate(genome)
        return fit

And then I augmented the serial evaluation of the genomes (shown here):

    # evaluate sequentially
    if not eval_concurrent:
        for k in population:
            k.fitness = dom.evaluate(k)

With the parallel evaluation that uses the celery server:

    # otherwise evaluate concurrently
    else:
        tasks = []
        for ind in population:
            tasks.append(evaltask.subtask((dom, ind)))
        job = TaskSet(tasks=tasks)
        result = job.apply_async()
        fits = result.join()

What this code does is make a list of Celery subtasks, one for each genome to be evaluated. It composes these into a larger “taskset,” which is run asynchronously, and the results are gathered (in order) by the join call once they all complete. If each task is non-trivial (i.e. it takes significantly longer to run than the overhead associated with serializing it and throwing it to Celery), then the speed-up gained from parallelization can be very nice.
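A rough back-of-the-envelope model shows why the task size matters. The sketch below assumes every task costs the same compute time plus a fixed per-task overhead for serialization and queueing, and that the work divides evenly across the workers. The function name and the numbers are illustrative guesses, not measurements.

```python
def estimated_speedup(task_seconds, overhead_seconds, workers):
    """Idealized speed-up of farming tasks out versus running them in-process.

    Assumes identical tasks, a fixed overhead per task, and perfect load
    balancing across the workers.
    """
    serial_cost = task_seconds  # per task, no queueing involved
    parallel_cost = (task_seconds + overhead_seconds) / workers
    return serial_cost / parallel_cost


# A 10 s physics simulation with 10 ms of overhead on 8 workers.
print(round(estimated_speedup(10.0, 0.01, 8), 2))

# A 1 ms task with the same overhead on the same 8 workers.
print(round(estimated_speedup(0.001, 0.01, 8), 2))
```

Under these assumptions the long simulation gets close to an 8x speed-up, while the tiny task comes out below 1x: farming it out is slower than just running it in place.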

Feel free to check out the complete source on GitHub (including the celeryconfig.py file).

Using concurrency requires a working Celery install (see http://www.celeryproject.org/tutorials/first-steps-with-celery/ for how to run Celery; it is fairly simple to install):

You can install Celery either via the Python Package Index (PyPI) or from source.

To install using pip:

    $ pip install Celery

To install using easy_install:

    $ easy_install Celery

Celery also needs a message broker to run. The default is RabbitMQ, available on Ubuntu as the package rabbitmq-server.
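For orientation, a celeryconfig.py that points Celery at a local RabbitMQ might look roughly like the sketch below. This is not the file from my repository: it assumes a default RabbitMQ install on the same machine (guest account, port 5672) and a tasks module named tasks.py. It uses the lowercase setting names from recent Celery releases; older releases spelled them in uppercase (BROKER_URL, CELERY_RESULT_BACKEND, CELERY_IMPORTS).

```python
# celeryconfig.py (illustrative sketch, not the file from the repository)

# Where workers and callers find the message queue. Assumes RabbitMQ's
# default guest account on the local machine.
broker_url = "amqp://guest:guest@localhost:5672//"

# Where task results are stored so that the caller can fetch them.
# "rpc://" sends them back over the broker itself.
result_backend = "rpc://"

# Modules the worker imports at startup to register tasks.
# "tasks" is an assumed module name.
imports = ("tasks",)
```

With something like this in place, the worker and the calling script only need to agree on the broker address to find each other.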